Resolution is one of the most familiar specs in TVs, monitors, cameras, streaming devices, and consoles. But terms like “4K” and “8K” can be confusing: are they just marketing words, or do they meaningfully change what you see? In this guide we explain what 4K and 8K actually are, how they differ, when your eyes can perceive the difference, the content and bandwidth you need, and which option makes more sense in real-world use.

Definitions in plain language

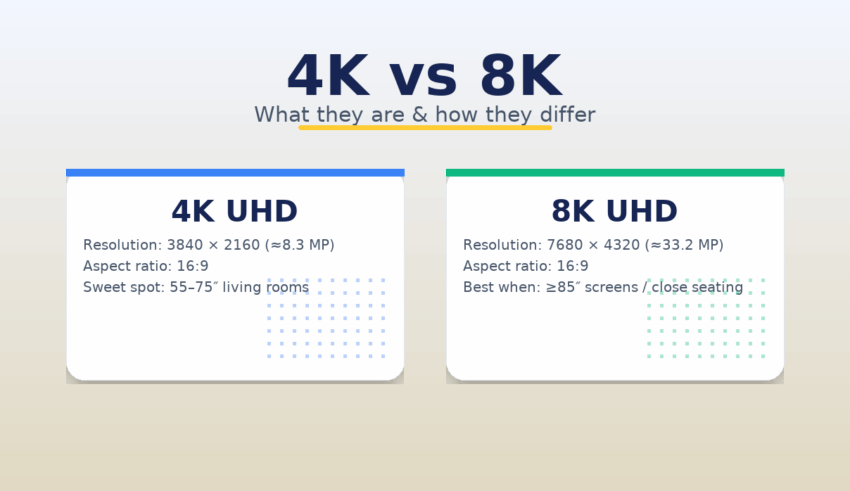

4K (UHD) on consumer TVs is 3840×2160 pixels—about 8.3 megapixels—with a 16:9 aspect ratio. In cinema production you may also see DCI-4K at 4096×2160, a slightly wider frame used in theaters.

8K (UHD) is 7680×4320 pixels (about 33.2 megapixels), also 16:9 on consumer displays. Because it doubles both the width and the height of 4K, 8K has four times the pixel count.
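These pixel counts come from simple multiplication. Here is a minimal Python sketch that reproduces them, with DCI 4K included for comparison. The dictionary and helper names are illustrative only.

```python
# Pixel-count arithmetic for the formats described above.
RESOLUTIONS = {
    "4K UHD": (3840, 2160),
    "DCI 4K": (4096, 2160),
    "8K UHD": (7680, 4320),
}

def megapixels(width: int, height: int) -> float:
    """Total pixel count in millions."""
    return width * height / 1_000_000

for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {w}x{h} = {w * h:,} px ({megapixels(w, h):.1f} MP)")

# Doubling both width and height multiplies the pixel count by 2 * 2 = 4.
ratio = (7680 * 4320) / (3840 * 2160)
print(f"8K / 4K pixel ratio: {ratio:.0f}x")
```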

4K vs 8K at a glance

- Resolution: 4K = 3840×2160; 8K = 7680×4320.

- Pixel count: 4K ≈ 8.3 million; 8K ≈ 33.2 million (4× more).

- Aspect ratio: both are typically 16:9 on TVs.

- HDR: available on both; panel quality and brightness often matter more than raw pixel count.

- Content availability: 4K = widespread; 8K = limited, often upscaled.

- Hardware/bandwidth: 8K needs faster connections, more storage, and more GPU power.

Can you actually see the difference?

Perceived sharpness depends on three factors: screen size, viewing distance, and visual acuity. At typical sofa distances (about 2–3 m), many people can tell 4K from 1080p on screens of roughly 50–55 inches and larger. Seeing a clear advantage for 8K generally requires very large screens (≈75–85 inches or more) and/or sitting closer than usual. This is why 8K demos look so impressive in showrooms, where viewers stand close to giant displays.
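One common way to make the size/distance/acuity trade-off concrete is to ask how far away you can sit before a single pixel becomes smaller than what the eye can resolve. The sketch below rests on two assumptions. First, it uses the rule of thumb that 20/20 vision resolves about 1 arcminute. Second, it assumes 16:9 screens. The function names are illustrative and do not come from any standard tool. Real perception also depends on contrast, content, and individual eyesight, so treat the output as a rough guide.

```python
import math

# Assumption: ~20/20 vision resolves detail down to about 1 arcminute.
# Sharper eyes resolve finer detail; this is a rule of thumb, not a hard limit.
ACUITY_ARCMIN = 1.0

def screen_width_m(diagonal_in: float, aspect=(16, 9)) -> float:
    """Physical width of a screen from its diagonal in inches."""
    aw, ah = aspect
    return diagonal_in * 0.0254 * aw / math.hypot(aw, ah)

def max_resolving_distance_m(diagonal_in: float, horizontal_px: int,
                             acuity_arcmin: float = ACUITY_ARCMIN) -> float:
    """Distance beyond which one pixel subtends less than the acuity angle."""
    pixel_pitch_m = screen_width_m(diagonal_in) / horizontal_px
    return pixel_pitch_m / math.tan(math.radians(acuity_arcmin / 60))

for size in (55, 65, 85):
    d4k = max_resolving_distance_m(size, 3840)
    d8k = max_resolving_distance_m(size, 7680)
    print(f'{size}": 4K pixels resolvable within {d4k:.2f} m, 8K within {d8k:.2f} m')
```

If you sit farther back than the 4K figure, the 4K pixel grid is already finer than the assumed acuity limit. That is why 8K's extra pixels mostly pay off on very large screens or at close range. On a given screen size, 8K halves the pixel pitch, so its threshold distance is always half the 4K figure.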

Another key variable is source quality. A well-shot, high-bit-rate 4K master can look better than a heavily compressed 8K stream. And while modern TVs upscale lower-resolution video surprisingly well using sophisticated processing and even AI, upscaling cannot create true detail that wasn’t captured.
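A crude way to see why a starved 8K stream can lose to a generous 4K one is to divide the bitrate by the number of pixels delivered per second. The sketch below assumes the same hypothetical bitrate (25 Mbps) and frame rate (24 fps) for both resolutions. Bits per pixel is only a rough indicator. Encoders distribute bits unevenly, and higher-resolution frames often compress more efficiently per pixel, so a quarter of the bit budget does not mean a quarter of the quality.

```python
def bits_per_pixel(bitrate_mbps: float, width: int, height: int, fps: float) -> float:
    """Average compressed bits available per pixel per frame."""
    return bitrate_mbps * 1_000_000 / (width * height * fps)

BITRATE_MBPS = 25  # hypothetical: identical stream bitrate for both resolutions
FPS = 24           # hypothetical: film frame rate

bpp_4k = bits_per_pixel(BITRATE_MBPS, 3840, 2160, FPS)
bpp_8k = bits_per_pixel(BITRATE_MBPS, 7680, 4320, FPS)
print(f"4K: {bpp_4k:.3f} bits/pixel, 8K: {bpp_8k:.3f} bits/pixel")
```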

Content and bandwidth: where 4K wins today

4K is mature: major streaming services host extensive 4K libraries, UHD Blu-ray offers pristine reference quality, and current-generation consoles output 4K with HDR. By contrast, native 8K content is still scarce. You’ll find tech demos, a handful of YouTube/streaming samples, and a small set of specialty productions. Most 8K TVs therefore rely on upscaling ordinary HD/4K to fill the panel.

Higher resolution increases data needs. Typical 4K streaming targets around 15–25 Mbps (depending on the service, bitrate strategy, and codec). Delivering truly clean 8K demands a multiple of that bandwidth, efficient codecs such as HEVC or AV1, and a fast, reliable internet connection and home network.
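Bitrate also translates directly into data usage, which matters on capped connections. The minimal sketch below converts the 4K figures into gigabytes per hour. It assumes a constant bitrate, although real streams vary scene by scene, and it uses decimal units. The helper name is illustrative.

```python
def gb_per_hour(bitrate_mbps: float) -> float:
    """Data transferred in one hour at a constant bitrate, in decimal gigabytes."""
    bits = bitrate_mbps * 1_000_000 * 3600
    return bits / 8 / 1_000_000_000

for mbps in (15, 25):
    print(f"{mbps} Mbps is about {gb_per_hour(mbps):.2f} GB per hour")
```

The relationship is linear. An 8K stream running at some multiple of the 4K bitrate therefore uses that same multiple of data.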

Hardware requirements and connectivity

- HDMI: 4K60 works on HDMI 2.0; 4K120, VRR and 8K60 require HDMI 2.1 support on both the TV and source device.

- Streaming devices: Ensure the streamer supports the desired resolution, HDR format (HDR10, Dolby Vision, HLG), and codec (HEVC/AV1).

- Storage: Editing or archiving 8K footage consumes storage quickly and stresses CPU/GPU resources compared with 4K.
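To see why the HDMI version matters, you can estimate the raw data each mode produces before any compression. The sketch below assumes 10-bit RGB (4:4:4) video and counts only active pixels. It ignores blanking intervals and link-encoding overhead, so actual link requirements are higher. The function name is illustrative.

```python
def raw_video_gbps(width: int, height: int, fps: int, bits_per_pixel: int) -> float:
    """Uncompressed active-pixel payload in Gbit/s.

    Ignores blanking intervals and link-encoding overhead, so real link
    requirements are higher than this figure.
    """
    return width * height * fps * bits_per_pixel / 1e9

BPP_10BIT_444 = 30  # assumption: 10 bits per channel x 3 channels, no chroma subsampling

modes = {
    "4K60": (3840, 2160, 60),
    "4K120": (3840, 2160, 120),
    "8K60": (7680, 4320, 60),
}
for name, (w, h, fps) in modes.items():
    print(f"{name}: {raw_video_gbps(w, h, fps, BPP_10BIT_444):.1f} Gbit/s")
```

The results come out to roughly 14.9, 29.9 and 59.7 Gbit/s. HDMI 2.0 carries up to 18 Gbit/s and HDMI 2.1 up to 48 Gbit/s, so 4K120 needs HDMI 2.1. Even HDMI 2.1 cannot carry uncompressed 10-bit 4:4:4 8K60. For that reason, 8K60 sources typically use chroma subsampling or Display Stream Compression (DSC).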

Gaming: frame rate vs pixels

Modern consoles focus on 4K with high frame rates (up to 120 Hz) and HDR. True 8K gaming is rare and typically requires a top-tier PC GPU, with compromises to rendering quality or frame rate. For responsiveness and clarity, many gamers prefer 4K120 over 8K60. If you upgrade your display for gaming, prioritise HDMI 2.1 features (4K120, VRR/FreeSync, ALLM) and low input lag before targeting 8K.
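Pixel throughput gives a rough sense of this trade-off. The sketch below compares how many pixels per second each mode asks the GPU to produce and how long each frame stays on screen. Treating pixel rate as a proxy for rendering cost is a simplification, because actual GPU load depends heavily on the game and its settings. The helper names are illustrative.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixels the GPU must produce each second at a given mode."""
    return width * height * fps

def frame_time_ms(fps: int) -> float:
    """Time budget to render and display one frame."""
    return 1000 / fps

for name, (w, h, fps) in {"4K120": (3840, 2160, 120), "8K60": (7680, 4320, 60)}.items():
    print(f"{name}: {pixels_per_second(w, h, fps):,} px/s, "
          f"{frame_time_ms(fps):.2f} ms per frame")
```

8K60 needs twice the pixel rate of 4K120 (about 1.99 billion vs 1.00 billion pixels per second). Yet it updates the image only every 16.7 ms instead of every 8.3 ms.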

Production and professional use

Creators often capture in 6K or 8K even when delivering in 4K. The extra pixels leave room for reframing, stabilisation, and VFX work without dropping below the delivery resolution. However, 8K dramatically increases workflow demands: faster storage, more RAM, robust GPUs, and longer render times. Unless your pipeline or client requirements explicitly need 8K, high-quality 4K remains a practical standard for most productions.
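Reframing headroom can be quantified as the largest punch-in that still leaves at least one captured pixel per delivered pixel. This sketch assumes 4K UHD delivery and looks only at pixel dimensions. Lens sharpness, sensor design, and debayering mean a camera's usable detail is typically below its nominal pixel count. `max_punch_in` is a hypothetical helper.

```python
def max_punch_in(src: tuple[int, int], delivery: tuple[int, int]) -> float:
    """Largest zoom factor that still leaves at least delivery-resolution pixels."""
    return min(src[0] / delivery[0], src[1] / delivery[1])

UHD_4K = (3840, 2160)
UHD_8K = (7680, 4320)

zoom = max_punch_in(UHD_8K, UHD_4K)
area_kept = 1 / zoom ** 2  # fraction of the original frame that remains in shot
print(f"8K -> 4K: up to {zoom:.1f}x punch-in, "
      f"framing as little as {area_kept:.0%} of the original area")
```

An 8K frame allows up to a 2x punch-in for 4K delivery. In other words, you can frame as little as a quarter of the original image.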

HDR, contrast and color—often a bigger upgrade than resolution

Resolution grabs headlines, but the biggest visual jump often comes from HDR performance (peak brightness, local dimming/contrast, and wide color gamut). A high-quality 4K HDR TV with excellent brightness and contrast can look more impressive than a budget 8K model with mediocre HDR. If movie nights and sports are your priority, panel quality beats pixel quantity.

When does 8K make sense?

- Very large screens (≈85″+) and close seating where 8K detail can be resolved.

- Specialised workflows (e.g., high-end post-production, exhibitions, installation art) that benefit from ultra-high-resolution assets.

- Future-proofing in environments where budget and bandwidth are ample and native 8K content is expected to become available.

When is 4K the smarter buy?

- You sit 2–3 m from a 55–75″ screen in a typical living room.

- You want the best HDR picture quality for the price today.

- You primarily watch streaming, broadcast, or UHD Blu-ray, and play console games.

- You prefer higher frame rates for fast sports and gaming over chasing more pixels.

Practical checklist before you buy

1) Room and seating

Measure your viewing distance. If you’re 2–3 m from the screen, 4K on a 65–75″ TV will already look crisp; 8K’s benefits are subtle unless you go very large or sit closer.
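To apply this step, you can turn the acuity rule of thumb around. Given your measured seating distance, estimate the screen size at which a 4K panel's individual pixels start to become resolvable. That is roughly where 8K could begin to add visible detail. The sketch assumes 16:9 screens and about 1 arcminute of acuity (20/20 vision). Viewers with sharper eyesight will notice differences at smaller sizes, so treat the output as a rough guide rather than a hard cutoff. `diagonal_where_pixels_resolvable` is a hypothetical helper.

```python
import math

# Assumption: ~20/20 vision resolves about 1 arcminute; sharper eyes lower the thresholds.
ACUITY_ARCMIN = 1.0

def diagonal_where_pixels_resolvable(distance_m: float, horizontal_px: int,
                                     acuity_arcmin: float = ACUITY_ARCMIN) -> float:
    """Smallest 16:9 diagonal (inches) at which one pixel subtends the acuity angle."""
    pitch_m = distance_m * math.tan(math.radians(acuity_arcmin / 60))
    width_m = pitch_m * horizontal_px
    return width_m / 0.0254 * math.hypot(16, 9) / 16

for d in (2.0, 2.5, 3.0):
    print(f"{d} m: 4K pixel grid becomes resolvable above about "
          f"{diagonal_where_pixels_resolvable(d, 3840):.0f} inches")
```

Under these assumptions, the threshold at 2–3 m is roughly 100–150 inches. That is consistent with 8K's benefits being subtle at normal living-room distances.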

2) Content mix

List what you actually watch: streaming platforms, live TV, sports, UHD discs, and games. If 95% of your content is 4K or lower, the panel’s HDR performance and motion handling matter more than 8K resolution.

3) Connectivity and bandwidth

Check your internet speed and home network. For rock-solid 4K, aim for at least 25 Mbps. If you plan to chase 8K demos, you’ll need significantly more bandwidth and compatible codecs (HEVC/AV1).
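If several people in your household stream at once, multiply the per-stream target. The minimal sketch below uses the 25 Mbps figure above. The optional `headroom` share, reserved for other traffic, is a hypothetical parameter and not a recommendation from any service. `concurrent_4k_streams` is an illustrative name.

```python
def concurrent_4k_streams(measured_mbps: float, per_stream_mbps: float = 25.0,
                          headroom: float = 0.0) -> int:
    """Number of 4K streams that fit in a connection.

    per_stream_mbps defaults to the 25 Mbps target discussed above.
    headroom is a hypothetical fraction of bandwidth reserved for other traffic.
    """
    usable_mbps = measured_mbps * (1 - headroom)
    return int(usable_mbps // per_stream_mbps)

print(concurrent_4k_streams(100))                # no reserved headroom
print(concurrent_4k_streams(100, headroom=0.2))  # keep 20% for other devices
```

For example, a measured 100 Mbps connection fits four 4K streams at 25 Mbps each, or three if you set aside 20% for other devices.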

4) Longevity and firmware

Choose brands that provide good firmware support, codec updates, and app performance over time. That matters more day-to-day than the jump from 4K to 8K for most users.

Environmental and cost considerations

Higher-resolution pipelines—from capture to playback—consume more energy and storage. An excellent 4K setup is not only less expensive but typically more efficient. If sustainability matters, focus on a well-calibrated 4K display with good HDR rather than pushing for 8K right now.

Bottom line

4K is the current sweet spot: abundant native content, manageable bandwidth, strong gaming support, and great picture quality—especially with HDR on a high-quality panel. 8K delivers remarkable pixel density, but its real-world advantages appear mostly on very large screens at close distances and with top-tier source material. For most homes, the smarter upgrade is a better 4K HDR TV with HDMI 2.1 and accurate picture modes, rather than chasing more pixels.